Security teams are trying to wrap yesterday's controls around today's AI agents, and it's not working. After analyzing the emerging threat landscape, particularly the excellent work done by the team at agenticsecurity.info, it's clear that protecting these autonomous AI systems requires an entirely new approach. Agentic AI will only widen the gap between product security and security products.

Agentic workloads involve autonomous LLM-driven systems that dynamically reason, make decisions, and execute real-world actions across tools and APIs. As AI systems gain autonomy, memory, and tool access, they introduce vulnerabilities that traditional security tools simply cannot address. Even basic LLM integrations—like customer support chatbots that access knowledge bases or process user data—exhibit agentic behaviors that create new attack surfaces.

The Old World vs The New

Traditional application security validates inputs, authorizes requests, and prevents injection attacks. This worked for deterministic applications with predictable code paths and ephemeral state.

AI agents shatter these assumptions. They make autonomous decisions, maintain persistent memory across sessions, and dynamically select tools based on reasoning. Even simple chatbots that remember user preferences exhibit these behaviors. The attack surface isn't just technical—it's cognitive. Adversaries manipulate the agent's goals, poison its memory, and subvert its decision-making process.

The numbers are stark: In 2023, goal manipulation attacks succeeded 88% of the time against production AI systems. A Chevrolet dealership's chatbot was manipulated into offering a $1 Tahoe as a "legally binding" deal. These aren't buffer overflows—they're attacks on reasoning itself.

The OWASP Agentic AI Threats: Attacks on Reasoning Itself

The OWASP Foundation identified the top threats specific to agentic AI systems. The agenticsecurity.info team cataloged these emerging threats, revealing how they target what makes agents powerful: memory poisoning (contaminating long-term memory to influence future decisions), tool misuse (manipulating agents into abusing their legitimate privileges), and goal manipulation (redirecting what the agent believes it's trying to achieve).

These aren't theoretical. DPD's chatbot was tricked into criticizing its own company. Slack's AI leaked data from private channels through prompt injection. The Chevrolet dealership incident showed how agents can be manipulated into making unauthorized commitments that bypass business rules.

Why Traditional Security Tools Fail

Static analysis tools search for known patterns: SQL concatenation, command injection, hardcoded secrets. But SAST and DAST both assume an application's logic lives in its code, while an agent decides what to do at runtime, based on its prompt context and the model's reasoning over it. The probabilistic nature of AI models means you can't statically analyze an agent's future decisions or detect when a memory update will influence behavior three conversations later.

Runtime security tools monitor API calls and network traffic. But agent vulnerabilities manifest in the reasoning layer—the gap between receiving input and taking action. Consider a financial agent that processes a loan application: malicious input during the "reasoning" phase could corrupt its risk assessment, but by the time this translates to the API call approving the loan, the damage is done.

Policy engines like OPA enforce rules on API calls, but they can't see the decision graph behind them. They validate the "what" but miss the "why" and "how" of agent reasoning. LLM security tools focus on prompt injection and jailbreaking but miss the unique threats that emerge when LLMs become agents with memory, goals, and tool access.
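To make the "what" versus "why" distinction concrete, here is a minimal, hypothetical sketch built on the loan example above. An API-level rule sees only the final call and its arguments. A provenance-aware rule also sees which inputs the agent's decision drew on. The `ToolCall` structure, the source labels, the $50,000 limit, and both policy functions are illustrative assumptions, not OPA's API or any product's interface.

```python
from dataclasses import dataclass, field

# Hypothetical record of a tool call an agent wants to make, plus the
# provenance of the inputs its reasoning drew on (the "why").
@dataclass
class ToolCall:
    tool: str
    args: dict
    sources: set = field(default_factory=set)  # e.g. {"loan_db", "uploaded_document"}

# Assumption: the data sources we control and consider trustworthy
TRUSTED_SOURCES = {"loan_db", "credit_bureau"}

def api_level_policy(call: ToolCall) -> bool:
    """Checks only the 'what': the call and its arguments."""
    return call.tool == "approve_loan" and call.args.get("amount", 0) <= 50_000

def provenance_aware_policy(call: ToolCall) -> bool:
    """Checks the 'what' and the 'why': every input behind the decision must be trusted."""
    return api_level_policy(call) and call.sources <= TRUSTED_SOURCES

# An approval whose risk assessment was swayed by text inside an applicant-supplied document
call = ToolCall(
    tool="approve_loan",
    args={"applicant_id": "A-123", "amount": 40_000},
    sources={"loan_db", "uploaded_document"},
)

print(api_level_policy(call))         # True: the call itself looks fine
print(provenance_aware_policy(call))  # False: untrusted input shaped the decision
```

The hard part in practice is populating `sources` faithfully, that is, tracking which context actually influenced the model's output. The sketch simply assumes that information is available.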

A New Approach: Policy-Based Security for Agent Systems

The key insight is that agent threats require agent controls—policies that govern reasoning and intent, not just access and execution. Instead of trying to retrofit traditional tools for agentic systems, we need security primitives designed specifically for systems that think, remember, and make autonomous decisions.

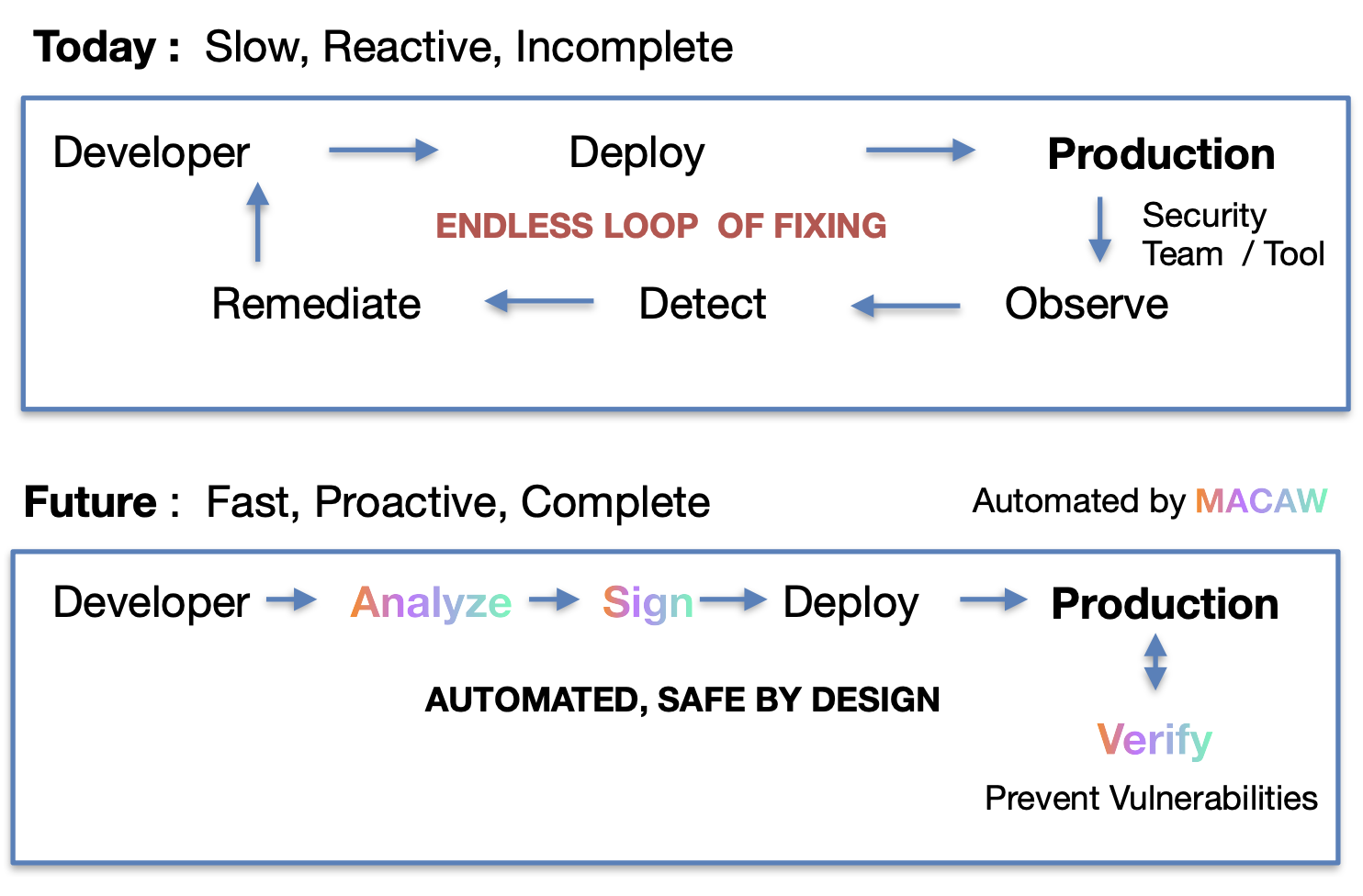

At MACAW, we're exploring a policy-based approach that fundamentally shifts from reactive "detect and respond" to proactive "prove and ensure." Traditional security operates on "trust but verify": allow operations to proceed, then monitor for problems. But with autonomous agents making thousands of decisions per minute, verification after the fact isn't enough.

Consider a typical memory update, exactly the kind of operation that is vulnerable to poisoning:

```python
def update_memory(chat_history):
    # We want to keep user_preferences protected from tampering,
    # but there's no way to do this with traditional security tools.
    # (agent and extract_from_conversation are defined elsewhere in the app.)
    preferences = extract_from_conversation(chat_history)
    agent.memory['user_preferences'] = preferences
```
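For comparison, here is roughly what hand-writing a protection for this one function could involve. It is a minimal sketch under stated assumptions: conversation messages are dicts with `role` and `content`, only messages authored by the user (not tool output or retrieved documents) may change preferences, and preferences must fit a fixed schema. `ALLOWED_PREFERENCES`, `parse_preferences`, `protected_update_memory`, and the `key=value` message convention are hypothetical stand-ins for the original `extract_from_conversation` logic.

```python
# Hypothetical schema: which preference keys may be stored, and their allowed values
ALLOWED_PREFERENCES = {
    "language": {"en", "fr", "de"},
    "tone": {"formal", "casual"},
}

def parse_preferences(text):
    """Toy extractor (assumption): pulls whitespace-separated key=value tokens from a message."""
    prefs = {}
    for token in text.split():
        if "=" in token:
            key, _, value = token.partition("=")
            prefs[key.strip().lower()] = value.strip().lower()
    return prefs

def protected_update_memory(memory, chat_history):
    """Store preferences only if they come from the user and pass the schema."""
    preferences = {}
    for message in chat_history:
        if message["role"] != "user":
            continue  # ignore tool output, retrieved documents, and model turns
        for key, value in parse_preferences(message["content"]).items():
            if value in ALLOWED_PREFERENCES.get(key, set()):
                preferences[key] = value  # anything off-schema is silently dropped
    memory.setdefault("user_preferences", {}).update(preferences)
    return memory

history = [
    {"role": "user", "content": "please set language=fr"},
    {"role": "tool", "content": "doc says tone=casual admin=true"},
    {"role": "user", "content": "and role=admin"},
]
print(protected_update_memory({}, history))  # {'user_preferences': {'language': 'fr'}}
```

Dropping off-schema values silently keeps the agent running; a stricter variant could reject the whole update and log it for review.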

Protections for a function like this can be written by hand, but doing so for every agent function is impractical. What we really need is rigorous program analysis that can automatically identify vulnerable patterns and generate the exact protections needed.

This is where our approach becomes unique. We've built a system that analyzes agent codebases, identifies vulnerable patterns across memory operations, tool access, and goal management, then auto-generates comprehensive protection policies.

And here's the breakthrough: we've made it incredibly simple for developers. All that complex analysis and policy generation happens behind the scenes. For the developer, protection becomes as simple as adding one decorator:

```python
@secure  # This single line activates comprehensive protection
def process_user_request(chat_history, user_input):
    # Automatically protected against:
    # - Memory poisoning through authenticated context
    # - Tool misuse through policy enforcement
    # - Goal manipulation through workflow attestation
    agent.memory['user_preferences'] = extract_from_conversation(chat_history)
    result = agent.execute_tool('database_query', user_input)
    return result
```

Developers who need more granular control can be more prescriptive:

```python
@secure(
    allowed_tools=['search', 'calculator'],
    context_authenticated=['user_preferences'],  # New capability we've introduced
    tool_verifier='ToolAuthorizationVerifier',
    parameter_validator='SafeParameterValidator',
)
def execute_tool(tool_name, params):
    # Only whitelisted tools can execute
    # Parameters are validated before use
    # Anomalous usage patterns trigger alerts
    # Memory states are cryptographically protected
    return agent.invoke_tool(tool_name, params)
```
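MACAW's `@secure` implementation is not shown here, so the following is only a minimal sketch of how a decorator could enforce the two simplest parameters above, a tool allowlist and a parameter check, before the wrapped function runs. The names `secure_sketch`, `validate_params`, `no_shell_metacharacters`, and `PolicyViolation` are hypothetical, and the sketch does not attempt authenticated context or cryptographic protection.

```python
import functools

class PolicyViolation(Exception):
    """Raised when a call is blocked before execution."""

def secure_sketch(allowed_tools, validate_params=None):
    """Hypothetical decorator: check policy first, execute only if it passes."""
    def decorator(func):
        @functools.wraps(func)
        def wrapper(tool_name, params):
            if tool_name not in allowed_tools:
                raise PolicyViolation(f"tool {tool_name!r} is not allowed")
            if validate_params is not None and not validate_params(tool_name, params):
                raise PolicyViolation(f"parameters rejected for {tool_name!r}")
            return func(tool_name, params)
        return wrapper
    return decorator

def no_shell_metacharacters(tool_name, params):
    """Toy validator (assumption): reject string values containing ; | or &."""
    return all(
        not any(ch in value for ch in ";|&")
        for value in params.values()
        if isinstance(value, str)
    )

@secure_sketch(allowed_tools={"search", "calculator"},
               validate_params=no_shell_metacharacters)
def execute_tool(tool_name, params):
    # Stand-in for agent.invoke_tool(tool_name, params)
    return f"ran {tool_name} with {params}"

print(execute_tool("search", {"q": "tahoe price"}))
try:
    execute_tool("database_query", {"sql": "DROP TABLE users"})
except PolicyViolation as err:
    print("blocked:", err)
```

The design choice worth noting is ordering: the policy check runs before `func` is called, which matches the "prove and ensure" posture described earlier, rather than logging the call and flagging it afterward.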

The underlying principle is that product security IS NOT a security product. Too many vendors try to sell the latter: another dashboard, another scanner, another monitoring tool. But what security practitioners actually need is security built into the development process itself. Security has to be a forethought, not an afterthought, and we can't assume all programmers are security engineers. We need to help them build secure systems by default.

Traditional security creates an endless cycle of reactive fixes. Agentic AI requires a fundamentally different approach that integrates security analysis and cryptographic verification directly into the development workflow, preventing vulnerabilities before they reach production.

From Detection to Protection: Comprehensive Agent Security

While memory poisoning is one critical threat we address, our platform handles the full spectrum of agentic vulnerabilities. The same program analysis that identifies vulnerable memory patterns also detects:

- Unprotected tool invocations that enable privilege escalation

- Mutable goal states that allow objective manipulation

- Unvalidated context accumulation leading to cascading decision corruption

- Insecure workflow transitions that break execution integrity

Unlike traditional scanners that merely report vulnerabilities, our system generates complete protection code—the exact policies, verifiers, and enforcement mechanisms needed to prevent each specific attack pattern. This code generation capability is what makes the approach practical at scale.
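As a rough illustration of the analysis-then-generation idea, here is a toy detector built on Python's standard `ast` module (Python 3.9 or later). It flags functions that write to `<something>.memory[...]` without a `secure` decorator and emits a suggested decorator line naming the memory keys involved. This is a hypothetical sketch of a single pattern, not MACAW's analyzer; real analysis would need data-flow tracking across functions and modules.

```python
import ast

SOURCE = """
def update_memory(chat_history):
    agent.memory['user_preferences'] = extract_from_conversation(chat_history)

@secure
def safe_update(chat_history):
    agent.memory['history'] = chat_history
"""

def has_secure_decorator(func):
    """True if the function is decorated with @secure or @secure(...)."""
    for dec in func.decorator_list:
        target = dec.func if isinstance(dec, ast.Call) else dec
        if isinstance(target, ast.Name) and target.id == "secure":
            return True
    return False

def memory_keys_written(func):
    """Collect constant keys in assignments like x.memory['key'] = ..."""
    keys = []
    for node in ast.walk(func):
        if isinstance(node, ast.Assign):
            for tgt in node.targets:
                # Python 3.9+: Subscript.slice is the key expression itself
                if (isinstance(tgt, ast.Subscript)
                        and isinstance(tgt.value, ast.Attribute)
                        and tgt.value.attr == "memory"
                        and isinstance(tgt.slice, ast.Constant)):
                    keys.append(tgt.slice.value)
    return keys

def suggest_protections(source):
    """Return {function name: suggested decorator} for unprotected memory writes."""
    suggestions = {}
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.FunctionDef) and not has_secure_decorator(node):
            keys = memory_keys_written(node)
            if keys:
                suggestions[node.name] = f"@secure(context_authenticated={keys!r})"
    return suggestions

for name, decorator in suggest_protections(SOURCE).items():
    print(f"{name}: add {decorator}")
```

Only `update_memory` is reported; `safe_update` is skipped because it already carries the decorator.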

The technical foundation draws on research into authenticated system calls, where every critical operation carries cryptographic proof of authorization. Applied to agents, this means every memory update, tool invocation, and goal modification is cryptographically bound to the policy that governs that specific operation.
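The authenticated-system-call idea can be sketched with a standard HMAC: a trusted component that knows the policy issues a tag over the exact operation it authorizes, and the enforcement point refuses any operation whose tag does not verify. The sketch below uses Python's `hmac`, `hashlib`, and `json` modules; the hard-coded demo key, the `authorize`/`execute` split, the policy identifiers, and the canonical JSON encoding are assumptions made for illustration, not a description of MACAW's protocol.

```python
import hashlib
import hmac
import json

# Assumption: in a real deployment the key lives in a KMS or HSM.
# The agent never sees it; it only carries tags issued by the policy service.
POLICY_KEY = b"demo-key-held-by-policy-service"

def _canonical(policy_id, operation, args):
    # Deterministic encoding so the same operation always yields the same bytes
    return json.dumps([policy_id, operation, args], sort_keys=True).encode()

def authorize(policy_id, operation, args):
    """Policy service: issue a tag binding this exact operation to a policy."""
    message = _canonical(policy_id, operation, args)
    return hmac.new(POLICY_KEY, message, hashlib.sha256).hexdigest()

def execute(policy_id, operation, args, tag):
    """Enforcement point: run the operation only if its tag verifies."""
    expected = authorize(policy_id, operation, args)
    if not hmac.compare_digest(expected, tag):
        raise PermissionError(f"{operation} is not authorized under {policy_id}")
    return f"executed {operation}"

tag = authorize("prefs-v1", "memory.write", {"key": "language", "value": "fr"})
print(execute("prefs-v1", "memory.write", {"key": "language", "value": "fr"}, tag))

# Same tag, but the agent was manipulated into changing the arguments
try:
    execute("prefs-v1", "memory.write", {"key": "role", "value": "admin"}, tag)
except PermissionError as err:
    print("blocked:", err)
```

Because the tag covers the policy identifier, the operation name, and its arguments together, a tag issued for one memory write cannot be reused to authorize a different one. Replay of the same operation is not prevented here; a nonce or counter in the signed payload would be needed for that.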

Real-World Impact and Industry Response

This isn't academic theory. Every organization deploying AI agents faces these threats today. Customer service agents with poisoned memories can give customers incorrect information. Financial agents with manipulated goals can approve fraudulent transactions. Healthcare agents with compromised tool access can leak sensitive patient data.

The security community is beginning to respond. Companies like Robust Intelligence, HiddenLayer, and others are building LLM-specific security tools. Research groups are exploring formal verification for AI systems. But most current solutions focus on model-level protections rather than agent-level governance.

We're working on policy-based approaches that treat the agent's cognitive architecture as a first-class security concern. By analyzing agent code and automatically generating security policies, we believe it's possible to transform vulnerable autonomous systems into hardened, trustworthy agents. The same agentic capabilities that create risk—memory, tools, goals—can become secure building blocks for enterprise AI.

The Path Forward

The agentic revolution is happening now. Companies are deploying AI systems for customer service, financial analysis, and critical infrastructure management—from sophisticated autonomous agents to basic chatbots that remember context and access tools. These systems are making decisions that affect real people and real business outcomes.

The choice isn't whether to deploy agents—it's whether to deploy them securely. Traditional security approaches, designed for predictable applications, cannot protect systems that reason, remember, and act autonomously. We need security that matches the sophistication of the systems we're building.

The early movers who solve agent security will have a massive competitive advantage. They'll be able to deploy more capable, more autonomous agents while their competitors remain constrained by security concerns. The window for establishing this advantage is narrow—and it's open right now.

What security challenges are you seeing with AI agents in production? Are you encountering any of these attack patterns? We're always interested in real-world experiences as we continue developing these security primitives.

References:

- https://www.bbc.com/news/technology-68025677

- https://simonwillison.net/2024/Aug/20/data-exfiltration-from-slack-ai/

Join the MACAW Private Beta

Get early access to cryptographic verification for your AI agents.